The Latest Computer Technology You Have to See to Believe

By Andre Infante, Make Use Of, 8 August 2014.

Moore’s Law, the truism that the amount of raw computational power available for a dollar tends to double roughly every eighteen months, has been a part of computer science lore since 1965, when Gordon Moore first observed the trend and wrote a paper on it. At the time, the “Law” bit was a joke. 49 years later, nobody’s laughing.

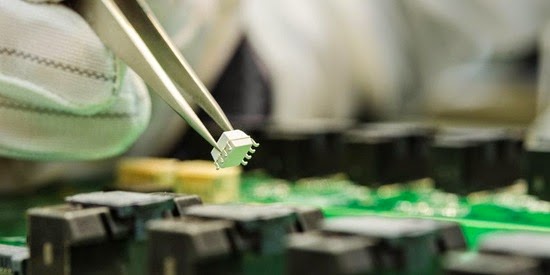

Right now, computer chips are made using an immensely refined, but very old fabrication method. Sheets of very pure silicon crystals are coated in various substances, engraved using high-precision laser beams, etched with acid, bombarded with high-energy impurities, and electroplated.

This process is repeated for more than twenty layers, building nanoscale components with a precision that is, frankly, mind-boggling. Unfortunately, these trends can’t continue forever.

We are rapidly approaching the point at which the transistors we are engraving will be so small that exotic quantum effects will prevent the basic operation of the machine. It’s generally agreed that the latest computer technology advances will run into the fundamental limits of silicon around 2020, when computers are about sixteen times faster than they are today. So, for the general trend of Moore’s Law to continue, we’ll need to part ways with silicon the way we did with vacuum tubes, and start building chips using new technologies that have more room for growth.
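The “sixteen times faster” figure follows from the eighteen-month doubling period quoted above. Here is a minimal sketch of that arithmetic, assuming a clean doubling every 1.5 years (the function name is made up for illustration):

```python
def moores_law_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """Growth factor after `years`, assuming one doubling per period."""
    return 2 ** (years / doubling_period_years)


# From 2014 (when this article was written) to 2020 is six years,
# which is four doubling periods of eighteen months each.
factor_by_2020 = moores_law_factor(2020 - 2014)
print(factor_by_2020)  # 16.0
```

Real hardware never tracks the curve this neatly, so treat the result as a rule of thumb rather than a forecast.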

4. Neuromorphic Chips

As the electronics market moves toward smarter technologies that adapt to users and automate more intellectual grunt work, many of the problems that computers need to solve are centred around machine learning and optimization. One powerful technology used to solve such problems is the ‘neural network.’

Neural networks reflect the structure of the brain: they have nodes that represent neurons, and weighted connections between those nodes that represent synapses. Information flows through the network, manipulated by the weights, in order to solve problems. Simple rules dictate how the weights between neurons change, and these changes can be exploited to produce learning and intelligent behaviour. This sort of learning is computationally expensive when simulated by a conventional computer.
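To make the idea concrete, here is a toy sketch of a single artificial neuron learning the logical AND function. It uses the classic perceptron update rule as one example of a “simple rule” for changing weights; the function names, the learning rate, and the training data are all illustrative assumptions, and real networks (and neuromorphic chips) are far larger and use other rules.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else stay quiet (0)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, rate=1.0):
    """Perceptron rule: nudge each weight in proportion to the error it contributed to."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Training data for logical AND: the output is 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Even this tiny example loops over every sample many times. Scale that up to millions of neurons and it becomes clear why simulating learning on a conventional processor gets expensive.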

Neuromorphic chips attempt to address this by using dedicated hardware specifically designed to simulate the behaviour and training of neurons. In this way, an enormous speedup can be achieved, while using neurons that behave more like the real neurons in the brain.

IBM and DARPA have been leading the charge on neuromorphic chip research via a project called SyNAPSE. SyNAPSE has the eventual goal of building a system equivalent to a complete human brain, implemented in hardware no larger than a real human brain. In the nearer term, IBM plans to include neuromorphic chips in its Watson systems, to speed up solving certain sub-problems in the algorithm that depend on neural networks.

IBM’s current system implements a programming language for neuromorphic hardware that allows programmers to use pre-trained fragments of a neural network (called ‘corelets’) and link them together to build robust problem-solving machines. You probably won’t have neuromorphic chips in your computer for a long time, but you’ll almost certainly be using web services that use servers with neuromorphic chips in just a few years.

3. Micron Hybrid Memory Cube

One of the principal bottlenecks for current computer design is the time it takes to fetch the data from memory that the processor needs to work on. The time needed to talk to the ultra-fast registers inside a processor is considerably shorter than the time needed to fetch data from RAM, which is in turn vastly faster than fetching data from the ponderous, plodding hard drive.

The result is that, frequently, the processor is left simply waiting for long stretches of time for data to arrive so it can do the next round of computations. Processor cache memory is about ten times faster than RAM, and RAM is about one hundred thousand times faster than the hard drive. Put another way, if talking to the processor cache is like walking to the neighbour's house to get some information, then talking to the RAM is like walking a couple of miles to the store for the same information - getting it from the hard drive is like walking to the moon.
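The ratios above can be turned into a rough cost model. The sketch below takes the article's figures at face value (RAM ten times slower than cache, the hard drive one hundred thousand times slower than RAM) and shows how even a tiny share of hard drive accesses dominates the average. The access mix in the example is a made-up assumption, not a measurement.

```python
import math

# Relative access costs, using the cache as the unit of time.
CACHE_COST = 1
RAM_COST = CACHE_COST * 10        # RAM is about ten times slower than cache
DISK_COST = RAM_COST * 100_000    # the hard drive is about 100,000 times slower than RAM


def average_cost(cache_share, ram_share, disk_share):
    """Average cost of one access, given the share of accesses served by each level."""
    if not math.isclose(cache_share + ram_share + disk_share, 1.0):
        raise ValueError("shares must add up to 1")
    return (cache_share * CACHE_COST
            + ram_share * RAM_COST
            + disk_share * DISK_COST)


# Hypothetical mix: 99% of accesses hit the cache, and only 0.01% reach the hard drive.
print(average_cost(0.99, 0.0099, 0.0001))
```

With this mix, one access in ten thousand going to the hard drive makes the average access roughly a hundred times slower than a pure cache hit. That is the waiting the processor ends up doing.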

Micron Technology may break the industry away from the regular progression of conventional DDR memory technology, replacing it with their own technology, which stacks RAM modules into cubes and uses higher-bandwidth connections to make it faster to talk to those cubes. The cubes are built directly onto the motherboard next to the processor (rather than inserted into slots like conventional RAM). The hybrid memory cube architecture offers five times more bandwidth to the processor than the DDR4 RAM coming out this year, and uses 70% less power. The technology is expected to hit the supercomputer market early next year, and the consumer market a few years later.

2. Memristor Storage

A different approach to solving the memory problem is designing computer memory that has the advantage of more than one kind of memory. Generally, the trade-offs with memory boil down to cost, access speed, and volatility (volatility is the property of needing a constant supply of power to keep data stored). Hard drives are very slow, but cheap and non-volatile.

RAM is volatile, but fast and cheap. Cache and registers are volatile and very expensive, but also very fast. The best-of-both-worlds technology is one that’s non-volatile, fast to access, and cheap to create. In theory, memristors offer a way to do that.

Memristors are similar to resistors (devices that reduce the flow of current through a circuit), with the catch that they have memory. Run current through them one way, and their resistance increases. Run current through the other way, and their resistance decreases. The result is that you can build high-speed, RAM-style memory cells that are non-volatile and can be manufactured cheaply.
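The behaviour described above can be sketched as a toy model: resistance drifts up or down with the direction of the current, stays put when no current flows, and is read back as a bit. Everything here (the class name, the resistance limits, the sensitivity constant) is a hypothetical illustration and not a physical model of any real memristor.

```python
class ToyMemristor:
    """Toy memory cell: resistance follows the charge pushed through it and then stays put."""

    def __init__(self, r_min=100.0, r_max=16_000.0, sensitivity=2.0e7):
        self.r_min = r_min              # lowest resistance in ohms (hypothetical value)
        self.r_max = r_max              # highest resistance in ohms (hypothetical value)
        self.sensitivity = sensitivity  # ohms of change per coulomb (hypothetical value)
        self.resistance = r_max         # start in the high-resistance state

    def apply_current(self, current_amps, duration_s):
        """Positive current raises the resistance, negative current lowers it."""
        charge = current_amps * duration_s
        shifted = self.resistance + self.sensitivity * charge
        # Clamp to the physical limits of the device.
        self.resistance = min(self.r_max, max(self.r_min, shifted))

    def read_bit(self):
        """Treat low resistance as 1 and high resistance as 0."""
        midpoint = (self.r_min + self.r_max) / 2
        return 1 if self.resistance < midpoint else 0


cell = ToyMemristor()
print(cell.read_bit())          # 0: the cell starts in the high-resistance state
cell.apply_current(-1e-3, 1.0)  # run current "backwards" to lower the resistance
print(cell.read_bit())          # 1
# With no current applied, nothing changes the resistance, so the bit persists.
print(cell.read_bit())          # 1
```

The key point is in the last lines: the state lives in the resistance itself, so nothing needs to keep the cell powered for the bit to survive.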

This raises the possibility of RAM blocks as large as hard drives that store the entire OS and file system of the computer (like a huge, non-volatile RAM disk), all of which can be accessed at the speed of RAM. No more hard drive. No more walking to the moon.

HP has designed a computer using memristor technology and specialized core design, which uses photonics (light-based communication) to speed up networking between computational elements. This device (called “The Machine”) is capable of doing complex processing on hundreds of terabytes of data in a fraction of a second. The memristor memory is 64-128 times denser than conventional RAM, which means that the physical footprint of the device is very small - and the entire shebang uses far less power than the server rooms it would be replacing. HP hopes to bring computers based on The Machine to market in the next two to three years.

1. Graphene Processors

Graphene is a material made of strongly bonded lattices of carbon atoms (similar to carbon nanotubes). It has a number of remarkable properties, including immense physical strength and near-superconductivity. There are dozens of potential applications for graphene, from space elevators to body armour to better batteries, but the one that’s relevant to this article is its potential role in computer architectures.

Another way of making computers faster, rather than shrinking transistor size, is to simply make those transistors run faster. Unfortunately, because silicon isn’t a very good conductor, a significant amount of the power sent through the processor winds up converted to heat. If you try to clock silicon processors up much above nine gigahertz, the heat interferes with the operation of the processor. Even reaching nine gigahertz requires extraordinary cooling efforts (in some cases involving liquid nitrogen). Most consumer chips run much more slowly.

Graphene, in contrast, is an excellent conductor. A graphene transistor can, in theory, run up to 500 GHz without any heat problems to speak of - and you can etch it the same way you etch silicon. IBM has engraved simple analogue graphene chips already, using traditional chip lithography techniques. Until recently, the issue has been twofold: first, that it’s very difficult to manufacture graphene in large quantities, and, second, that we do not have a good way to create graphene transistors that entirely block the flow of current in their ‘off’ state.

The first problem was solved when electronics giant Samsung announced that its research arm had discovered a way to mass produce whole graphene crystals with high purity. The second problem is more complicated. The issue is that, while graphene’s extreme conductivity makes it attractive from a heat perspective, it’s also annoying when you want to make transistors - devices that are intended to stop conducting billions of times a second. Graphene, unlike silicon, lacks a ‘band gap’ - a range of energies that electrons in the material cannot occupy, which is what allows a silicon transistor to switch fully off. Luckily, it looks like there are a few options on that front.

Samsung has developed a transistor that uses the properties of a silicon-graphene interface in order to produce the desired properties, and built a number of basic logic circuits with it. While not a pure graphene computer, this scheme would preserve many of the beneficial effects of graphene. Another option may be the use of ‘negative resistance’ to build a different kind of transistor that could be used to construct logic gates that operate at higher power, but with fewer elements.

Of the technologies discussed in this article, graphene is the farthest away from commercial reality. It could take up to a decade for the technology to be mature enough to really replace silicon entirely. However, in the long term, it’s very likely that graphene (or a variant of the material) will be the backbone of the computing platform of the future.

The Next Ten Years

Our civilization and much of our economy have come to depend on Moore’s Law in profound ways, and enormous institutions are investing tremendous amounts of money in trying to forestall its end. A number of minor refinements (like 3D chip architectures and error-tolerant computing) will help to sustain Moore’s Law past its theoretical six-year horizon, but that sort of thing can’t last forever.

At some point in the coming decade, we’ll need to make the jump to a new technology, and the smart money’s on graphene. That changeover is going to seriously shake up the status quo of the computer industry, and make and lose a lot of fortunes. Even graphene is not, of course, a permanent solution. It’s very likely that in a few decades we’ll find ourselves back here again, debating what new technology is going to take over, now that we’ve reached the limits of graphene.
